When comparing HDR10 vs HDR600, the difference is that HDR10 is a base-level HDR format that specifies 10-bit color and a wide color gamut for the video signal, while HDR600 is a tier of the VESA DisplayHDR standard which means that a screen supports 600 nits of peak luminance, plus other technical characteristics.

HDR has quickly become a standard feature on monitors, offering a higher dynamic range image with greater detail in both the darkest and brightest parts of the picture.

Both HDR10 and HDR600 are seen in marketing materials for monitors and laptops, but the HDR terminology can be so confusing that it is difficult to figure out exactly what these numbers mean in practice.

This article gives a quick overview of the differences between HDR10 and HDR600 to help you decide which is going to be most appropriate for your needs.

The Difference Between HDR10 and HDR600

HDR10 is an open standard announced by the Consumer Electronics Association (now the Consumer Technology Association) in 2015. It specifies a wide color gamut and 10-bit color, and it is the most widely used of the HDR formats.

It’s a fairly basic format that doesn’t really tell you much on its own, only that the screen can handle high dynamic range images, with plenty of detail in darks and lights and good contrast. A good HDR10 monitor can work well for photo editing, but the label alone doesn’t guarantee it.
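To put "10-bit color" into numbers: each of the red, green and blue channels gets 2^10 = 1,024 shades instead of the 256 available at 8 bits. The short Python sketch below does that arithmetic. It only counts the values the signal can encode and says nothing about how many of them a particular screen can actually reproduce.

```python
# Shades per channel and total colors for a given bit depth,
# assuming three color channels (R, G, B) with equal bit depth.
def color_stats(bits_per_channel: int) -> tuple[int, int]:
    levels = 2 ** bits_per_channel  # distinct shades per channel
    total = levels ** 3             # every combination of R, G and B
    return levels, total


for bits in (8, 10):
    levels, total = color_stats(bits)
    print(f"{bits}-bit: {levels} levels per channel, {total:,} colors")
# 8-bit: 256 levels per channel, 16,777,216 colors
# 10-bit: 1024 levels per channel, 1,073,741,824 colors
```

The jump from roughly 16.7 million to roughly 1.07 billion colors is what lets HDR content show smoother gradients across its wider brightness range.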

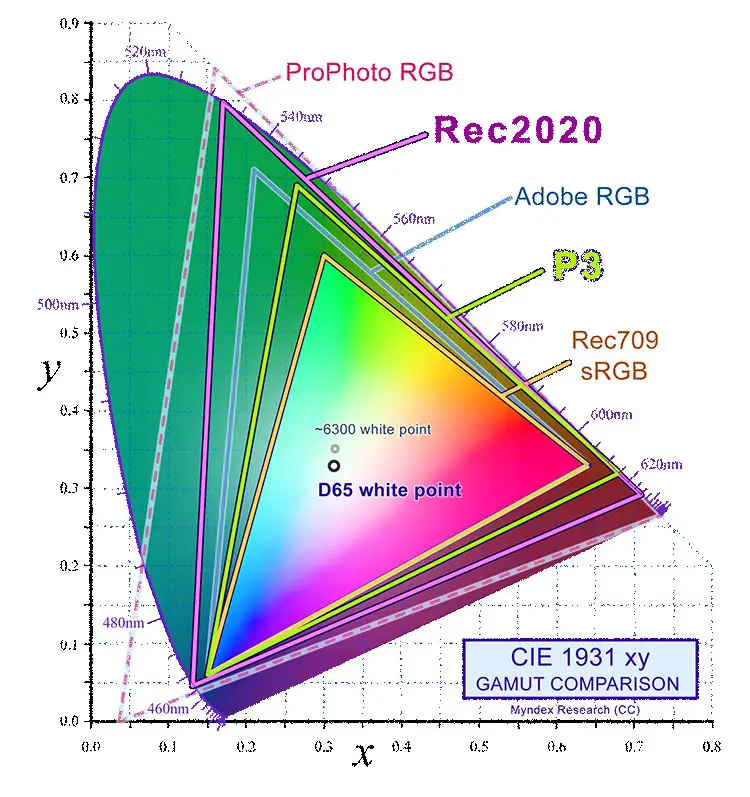

HDR10 content uses the Rec. 2020 color gamut, which is much wider than the DCI-P3 gamut that HDR600 screens are measured against. Of course, just because a monitor accepts a Rec. 2020 signal doesn’t mean that it is able to display all of its colors.

(Source: Wikimedia Commons)

HDR10 competes directly with HDR10+, which adds dynamic metadata so that the screen can adjust the dynamic range scene by scene or even frame by frame, and with Dolby Vision, which also uses dynamic metadata but is a proprietary format.
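The difference between static and dynamic metadata is easier to see with numbers. HDR10's static metadata includes values such as MaxCLL (the brightest single pixel in the whole video) and MaxFALL (the brightest frame-average level). The Python sketch below computes both for a tiny made-up video. The `per_frame_peaks` function is a hypothetical simplification standing in for dynamic metadata; it is not the actual HDR10+ or Dolby Vision format.

```python
# Toy model (assumption): a frame is a list of pixels, each pixel an (R, G, B)
# tuple of light levels in nits. Real HDR10 video encodes values with the PQ
# curve; this sketch skips that and works directly in nits.
Pixel = tuple[float, float, float]
Frame = list[Pixel]


def max_cll(frames: list[Frame]) -> float:
    """Brightest single pixel component anywhere in the video (one value)."""
    return max(max(px) for frame in frames for px in frame)


def max_fall(frames: list[Frame]) -> float:
    """Highest frame-average light level, using max(R, G, B) per pixel."""
    return max(sum(max(px) for px in frame) / len(frame) for frame in frames)


def per_frame_peaks(frames: list[Frame]) -> list[float]:
    """Hypothetical stand-in for dynamic metadata: one peak value per frame.

    This is NOT the real HDR10+ or Dolby Vision metadata format.
    """
    return [max(max(px) for px in frame) for frame in frames]


video = [
    [(5.0, 4.0, 3.0), (10.0, 8.0, 6.0)],             # dim scene
    [(900.0, 850.0, 700.0), (200.0, 180.0, 150.0)],  # bright highlight
]

print(max_cll(video), max_fall(video))  # 900.0 550.0 (one pair for the whole video)
print(per_frame_peaks(video))           # [10.0, 900.0] (one value per frame)
```

With static metadata only, the screen knows the 900-nit figure for the whole video, even while it is showing the dim first frame; per-frame values let it treat the dim scene differently.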

HDR600 is one tier of DisplayHDR, an official standard developed and supported by VESA. It is not directly comparable with HDR10, although there is some overlap.

DisplayHDR 600, as it is more properly known, is aimed at professional and enthusiast monitors and laptops. It specifies that a screen can display a peak luminance of 600 cd/m² in a 10% center patch test and a full-screen flash test, and 350 cd/m² in a sustained, 30-minute full-screen test. It also requires local dimming, 90% coverage of the DCI-P3 color gamut and 10-bit signal processing.

Pretty much every monitor that uses HDR will support HDR10, as it is such a wide-ranging standard with no real independent testing to validate manufacturers’ claims. Only a handful will have HDR600 certification, as this is a much more specific, multi-level format that is certified by VESA.

HDR600 vs DisplayHDR600

One important point to note is that some less scrupulous manufacturers give their screens an HDR600 label if the screen can hit 600 nits of peak luminance under any circumstances.

Most consumers would assume this means the screen is DisplayHDR600 certified, but that is not necessarily the case.

DisplayHDR600 is a fully validated standard that can only be awarded by VESA, and it is much more stringent than manufacturers’ own tests.

While a manufacturer might claim an HDR600 designation because the screen reaches 600 cd/m² in a flash test, VESA would only award certification if the monitor reached 600 cd/m² in both a 10% center patch test and a flash test, and also sustained 350 cd/m² in a 30-minute full-screen test.

There are also many more criteria to achieve the VESA standard, which are listed on VESA’s DisplayHDR website.

HDR600 vs HDR10 Summary

HDR10 means that the screen can support 10-bit color, but it does not necessarily make any claim about brightness or color uniformity.

HDR600 means that the screen can support a minimum peak brightness of 600 nits and meets other requirements for peak brightness, average brightness, color uniformity and black level.


Note that it can be difficult to tell the real differences between HDR10 and HDR600 monitors from a video comparison; you should try to compare the monitors in real life, if possible.

HDR10 and HDR600 Specifications

In detail, the most important differences between HDR10 and HDR600 are:

HDR10 – the Most Basic HDR Standard

- 10-bit imaging

- Uses the Rec. 2020 color gamut, although it does not specify how much of the gamut a screen must be able to display

- Static HDR metadata that provides info for dynamic range on a per-video basis

DisplayHDR 600 – for Professional and Enthusiast Laptops and Monitors

- 10-bit imaging

- Follows the smaller DCI-P3 color gamut, but specifies that the screen must hit 90% coverage

- Local dimming, meaning that the backlight can be dimmed independently in different zones across the screen

- Peak luminance of 600 cd/m² in the 10% center patch test and the full-screen flash test

- Peak luminance of 350 cd/m² sustained for 30 minutes over the entire screen

- Maximum black level luminance of 0.1 cd/m² in the screen center
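The DisplayHDR 600 figures listed above can be read as a set of pass/fail thresholds. The Python sketch below checks a panel's measured values against just those thresholds. It is a simplification, not VESA's actual test procedure or its full list of criteria; the field names and sample values are hypothetical, and passing this check does not make a monitor certified, since only VESA can award DisplayHDR certification.

```python
from dataclasses import dataclass


@dataclass
class PanelMeasurements:
    """Hypothetical container for measured results (luminance in cd/m²)."""
    center_patch_10pct: float  # peak luminance, 10% center patch test
    fullscreen_flash: float    # peak luminance, full-screen flash test
    sustained_30min: float     # full-screen luminance held for 30 minutes
    black_level: float         # black level luminance in the screen center
    dci_p3_coverage: float     # fraction of DCI-P3 covered, e.g. 0.92
    processing_bits: int       # signal processing bit depth
    local_dimming: bool


def displayhdr600_failures(m: PanelMeasurements) -> list[str]:
    """Return the listed DisplayHDR 600 thresholds that a panel misses."""
    failures = []
    if m.center_patch_10pct < 600:
        failures.append("10% center patch below 600 cd/m²")
    if m.fullscreen_flash < 600:
        failures.append("full-screen flash below 600 cd/m²")
    if m.sustained_30min < 350:
        failures.append("30-minute sustained below 350 cd/m²")
    if m.black_level > 0.1:  # black level is a maximum, so lower is better
        failures.append("black level above 0.1 cd/m²")
    if m.dci_p3_coverage < 0.90:
        failures.append("DCI-P3 coverage below 90%")
    if m.processing_bits < 10:
        failures.append("signal processing below 10-bit")
    if not m.local_dimming:
        failures.append("no local dimming")
    return failures


# A made-up panel that only hits 600 cd/m² in a flash test, the kind of result
# behind some unofficial "HDR600" labels.
flash_only = PanelMeasurements(480, 610, 300, 0.3, 0.85, 8, False)
print(displayhdr600_failures(flash_only))

# A made-up panel that clears every threshold in this simplified list.
meets_list = PanelMeasurements(650, 620, 380, 0.08, 0.93, 10, True)
print(displayhdr600_failures(meets_list))  # []
```

The flash-only panel passes the single test a manufacturer might quote but misses six of the seven listed requirements, which is the gap between an "HDR600" sticker and genuine DisplayHDR 600 certification.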

Final Thoughts on HDR10 and HDR600

You can see from the specifications above that there is very little overlap between HDR10 and HDR600 in practice.

Pretty much any entry-level monitor, excluding those at the very budget end, will support HDR10 nowadays. This simply means that they can display HDR content and may have better dynamic range and contrast than older, non-HDR monitors, but their actual performance varies depending on the manufacturer.

HDR600 monitors, on the other hand, provided they have been verified by VESA, have high, provable performance in color gamut, peak luminance and black level, among many other smaller technical details.

If you really care about your display, you would therefore be much better off going for HDR600 over HDR10. This is particularly the case for gamers, as HDR600 can really enhance modern games.
